**AI and HPC Acceleration at Its Apex**

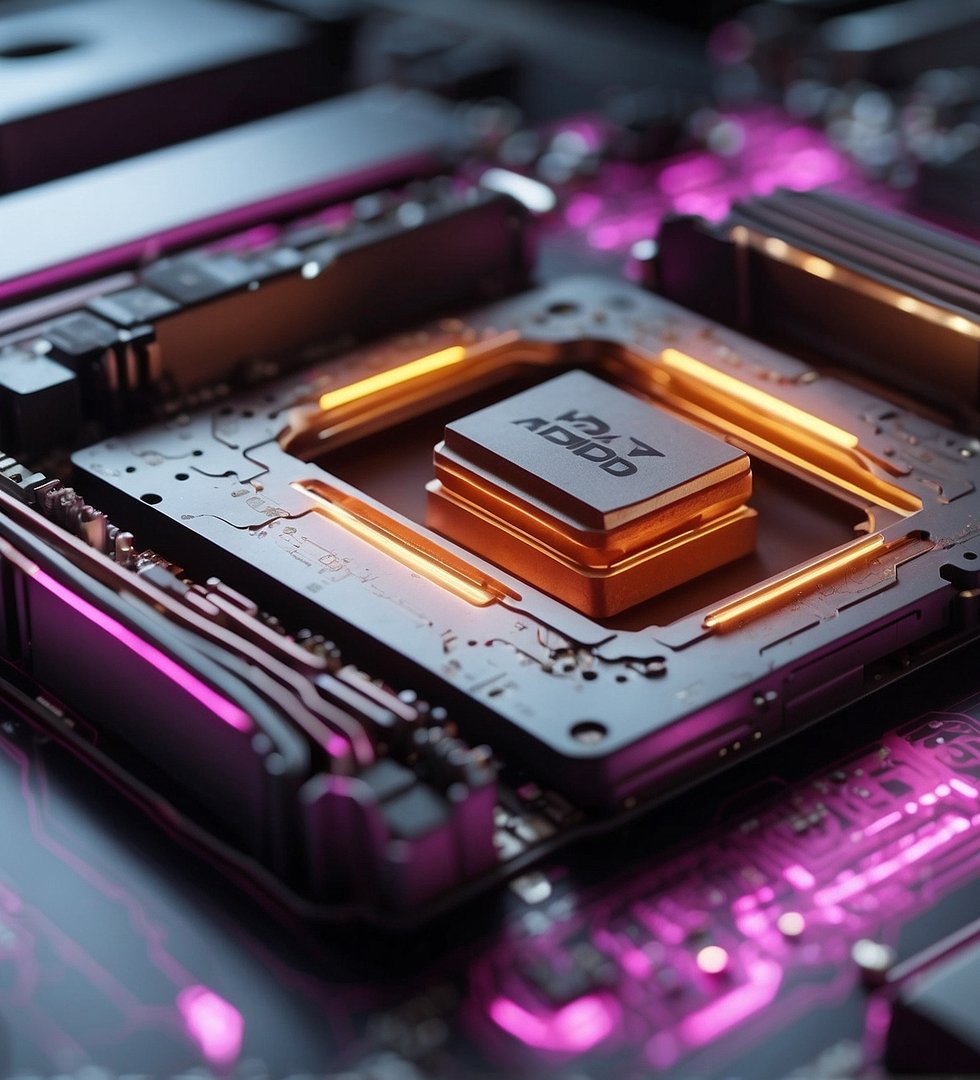

In the ever-evolving realm of data-driven computing, organizations are constantly seeking new ways to harness the transformative power of artificial intelligence (AI) and high-performance computing (HPC). To meet these burgeoning demands, AMD has unveiled the groundbreaking AMD Instinct™ MI300X Accelerator, a cutting-edge, industry-standard accelerator module that redefines the boundaries of performance and innovation.

The AMD Instinct™ MI300X Accelerator is built upon the revolutionary AMD CDNA™ 3 architecture, meticulously engineered to deliver unparalleled performance and efficiency for AI, ML, and HPC workloads. It boasts an arsenal of cutting-edge features, including:

* **304 High-Throughput Compute Units:** This prodigious array of compute units unleashes immense processing power, enabling the MI300X to tackle the most demanding AI and HPC tasks with remarkable efficiency.

* **AI-Specific Functions:** Empower your AI and ML applications with a suite of specialized functions, including new data-type support, photo and video decoding, and seamless integration with leading AI frameworks.

* **Unprecedented Memory Capacity:** Access up to an astonishing 192 GB of HBM3 memory, providing ample bandwidth and storage to handle even the most memory-intensive AI training and inference workloads.
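For a rough feel of what 192 GB means in practice, the sketch below estimates the memory needed just to hold a model's weights and compares it with the accelerator's stated capacity. The model sizes and bytes-per-parameter values are illustrative assumptions, not AMD figures, and the estimate ignores activations, KV cache, and optimizer state, so real workloads need additional headroom.

```python
# Rough weight-memory estimate. Model sizes are hypothetical examples,
# not AMD figures; only the 192 GB capacity comes from the text above.
HBM3_CAPACITY_GB = 192

# Bytes needed to store one parameter in each data type.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Return the memory needed for the weights alone, in GB (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_on_one_gpu(num_params: float, dtype: str) -> bool:
    """True if the weights alone fit in 192 GB (no headroom accounted for)."""
    return weight_memory_gb(num_params, dtype) <= HBM3_CAPACITY_GB


if __name__ == "__main__":
    # Hypothetical model sizes, chosen only for illustration.
    for params in (7e9, 70e9, 180e9):
        for dtype in ("fp16", "fp8"):
            gb = weight_memory_gb(params, dtype)
            print(f"{params / 1e9:.0f}B params, {dtype}: {gb:.0f} GB, "
                  f"fits={fits_on_one_gpu(params, dtype)}")
```

Under these assumptions, a 70-billion-parameter model stored in FP16 needs about 140 GB for its weights, which is within a single accelerator's capacity.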

The AMD Instinct™ MI300X discrete GPU is based on the next-generation AMD CDNA™ 3 architecture, delivering leadership efficiency and performance for the most demanding AI and HPC applications. It is designed with 304 high-throughput compute units, AI-specific functions including new data-type support, photo and video decoding, plus an unprecedented 192 GB of HBM3 memory on a GPU accelerator. State-of-the-art die stacking and chiplet technology in a multi-chip package propel generative AI, machine learning, and inferencing, while extending AMD leadership in HPC acceleration. The MI300X offers a substantial performance gain over our prior generation, which already powers the fastest exascale-class supercomputer [1]: 13.7x the peak AI/ML workload performance using FP8 with sparsity compared to prior AMD MI250X* accelerators using FP16 [MI300-16], and a 3.4x peak advantage for HPC workloads on FP32 calculations.
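The 13.7x and 3.4x figures are ratios of peak theoretical throughput, and the arithmetic can be sketched as below. The TFLOPS values are assumptions drawn from AMD's public specification sheets rather than from this page, so treat them as illustrative and check them against the current datasheets.

```python
# Peak theoretical throughput in TFLOPS. These values are assumptions
# based on AMD's public spec sheets, not figures stated on this page.
MI300X_FP8_SPARSE_TFLOPS = 5229.8  # MI300X, FP8 with sparsity
MI250X_FP16_TFLOPS = 383.0         # MI250X, FP16
MI300X_FP32_TFLOPS = 163.4         # MI300X, FP32
MI250X_FP32_TFLOPS = 47.9          # MI250X, FP32


def peak_ratio(new_tflops: float, old_tflops: float) -> float:
    """Return how many times higher the new peak is than the old peak."""
    return new_tflops / old_tflops


if __name__ == "__main__":
    ai_ratio = peak_ratio(MI300X_FP8_SPARSE_TFLOPS, MI250X_FP16_TFLOPS)
    hpc_ratio = peak_ratio(MI300X_FP32_TFLOPS, MI250X_FP32_TFLOPS)
    print(f"AI/ML (FP8 sparse vs FP16): {ai_ratio:.1f}x")
    print(f"HPC (FP32 vs FP32): {hpc_ratio:.1f}x")
```

Note that the AI/ML comparison mixes data types (FP8 with sparsity against dense FP16), so it describes peak rates rather than a like-for-like speedup on a given workload.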

**Designed for Efficiency and Scalability**

The AMD Instinct™ MI300X Accelerator is not just about raw power; it's also about achieving peak performance with minimal energy consumption. Key features that contribute to its exceptional efficiency include:

* **State-of-the-Art Die Stacking and Chiplet Technology:** This cutting-edge approach enables the MI300X to integrate multiple compute and memory dies in a single multi-chip package, which helps reduce power consumption and heat generation.

* **AMD Infinity Fabric™ Technology:** Seamlessly connect multiple MI300X accelerators for unparalleled scalability, ensuring efficient data transfer and collaboration across multi-GPU systems.

* **SR-IOV for up to 8 Partitions:** Divide the accelerator into multiple virtual partitions to optimize resource allocation for specific workloads, maximizing efficiency and flexibility.

**Unlocking the Potential of AMD ROCm™ Software**

To fully harness the capabilities of the AMD Instinct™ MI300X Accelerator, AMD has developed the open-source AMD ROCm™ software platform. This comprehensive toolkit provides a unified environment for developing and deploying applications on AMD Instinct accelerators and for managing the accelerators themselves, making it easier than ever to build and deploy AI and HPC applications on AMD hardware.
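As a minimal sketch of what working with ROCm can look like from an AI framework, the snippet below checks whether a PyTorch installation is a ROCm build and whether it can see a GPU. PyTorch is an assumption here (the page does not name a framework), and `describe_accelerator` is a hypothetical helper name. ROCm builds of PyTorch expose the GPU through the usual `torch.cuda` interface.

```python
def describe_accelerator() -> str:
    """Summarize whether PyTorch sees a ROCm-backed GPU (hypothetical helper)."""
    try:
        import torch  # assumption: PyTorch is the framework in use
    except ImportError:
        return "PyTorch is not installed"

    # torch.version.hip holds a version string on ROCm builds, None otherwise.
    hip_version = getattr(torch.version, "hip", None)
    if hip_version is None:
        return "PyTorch is installed, but this is not a ROCm build"

    # On ROCm builds, the torch.cuda API is backed by HIP devices.
    if not torch.cuda.is_available():
        return f"ROCm build (HIP {hip_version}), but no GPU is visible"

    name = torch.cuda.get_device_name(0)
    total_gb = torch.cuda.get_device_properties(0).total_memory / 1e9
    return f"ROCm build (HIP {hip_version}): {name}, {total_gb:.0f} GB"


if __name__ == "__main__":
    print(describe_accelerator())
```

The function only reports what it finds and does not raise, so it can be run on a machine without a GPU or without PyTorch.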

**Accelerating Your Next Breakthrough**

The AMD Instinct™ MI300X Accelerator is the ultimate weapon for organizations seeking to push the boundaries of AI, HPC, and innovation. With its unparalleled performance, efficiency, scalability, and open-source software ecosystem, the MI300X empowers you to:

* **Unleash the full potential of AI to solve complex problems, discover new insights, and drive breakthroughs in various industries.**

* **Accelerate HPC simulations for scientific research, weather forecasting, and other computationally intensive applications.**

* **Transform your data center into a powerhouse of AI and HPC innovation, enabling you to stay ahead of the competition.**

Timestamps for this AMD Advancing AI Event Supercut:

00:00 Investing in Artificial Intelligence

01:49 Launch - AMD MI300X GPU

04:35 AMD MI300X vs Nvidia H100

08:54 AMD 8 GPU Platform vs Nvidia DGX H100

11:26 AMD ROCm vs Nvidia CUDA

14:36 AMD Networking vs Nvidia NVLink & InfiniBand

17:49 Launch - AMD MI300A APU

20:04 AMD MI300A vs Nvidia H100

22:35 Launch - AMD Ryzen 8040 AI PC CPUs

24:37 AMD Ryzen 8945 vs Intel Core i9 13900H

**Embrace the Future of AI and HPC**

With the AMD Instinct™ MI300X Accelerator and AMD ROCm™ software, you have the power to revolutionize your organization's data-driven endeavors. Join the vanguard of innovation and unleash the transformative potential of AI, HPC, and the AMD Instinct™ MI300X Accelerator.
